Kiev. Ukraine. Ukraine Gate – January 6, 2021 – Technology

OpenAI specialists have developed two neural networks at once. One of them can generate images from a text description, while the other can recognize objects in images and classify them based on a text description.

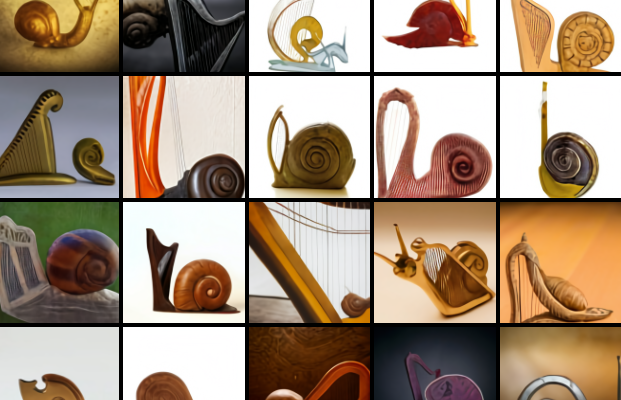

The neural network that was trained to draw pictures from a description is called DALL-E. Interestingly, it does not search the Internet for images matching the description but creates them itself. For example, here is what DALL-E produces for the prompt "chair in the shape of an avocado."

Note, however, that all prompts must be entered in English, since that is the only language the neural network can process.

The second neural network presented by OpenAI is called CLIP. It is designed to classify images based on a text description, which must also be in English.